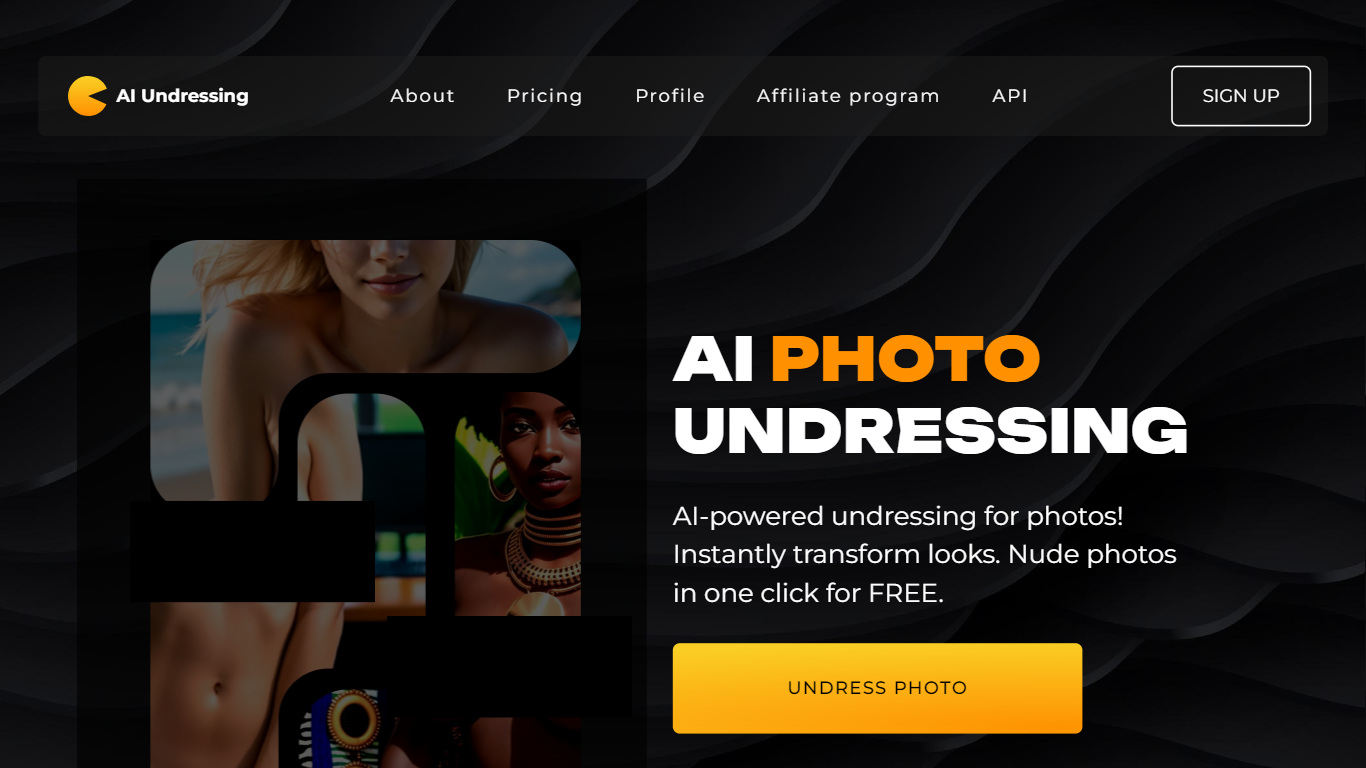

The term “undress AI remover” refers to a controversial and rapidly emerging category of artificial intelligence tools designed to remove clothing from images, often marketed as entertainment or “fun” image editors. At first glance, such technology may look like an extension of harmless photo-editing innovations. Beneath the surface, however, lies a troubling ethical dilemma and the potential for severe abuse. These tools often use deep learning models, such as generative adversarial networks (GANs), trained on datasets containing human bodies to realistically simulate what a person might look like without clothes, without their knowledge or consent. While this may sound like science fiction, the reality is that these apps and web services are becoming increasingly accessible to the public, raising alarm among digital rights activists, lawmakers, and the broader online community. The availability of such software to virtually anyone with a smartphone or internet connection opens up disturbing possibilities for misuse, including revenge porn, harassment, and the violation of personal privacy. What’s more, many of these platforms lack transparency about how their data is sourced, stored, or used, and they often evade legal accountability by operating in jurisdictions with lax digital privacy laws.

These tools exploit sophisticated algorithms that fill in visual gaps with fabricated details based on patterns in massive image datasets. While impressive from a technological standpoint, the potential for misuse is undeniably high. The results can appear shockingly realistic, further blurring the line between what’s real and what’s fake in the digital world. Victims of these tools may find altered images of themselves circulating online and face embarrassment, anxiety, or even damage to their careers and reputations. This brings into focus questions of consent, digital safety, and the responsibilities of AI developers and the platforms that allow these tools to proliferate. Moreover, there is often a cloak of anonymity surrounding the developers and distributors of undress AI removers, making regulation and enforcement an uphill battle for authorities. Public awareness of the issue remains low, which only fuels its spread, as people fail to understand the seriousness of sharing or even passively engaging with such altered images.

The societal implications are profound. Women, in particular, are disproportionately targeted by such technology, making it another tool in the already sprawling arsenal of digital gender-based violence. Even in cases where the AI-generated image is not shared widely, the psychological impact on the person depicted can be intense. Just knowing that such an image exists can be deeply distressing, especially since removing content from the internet is nearly impossible once it has been circulated. Human rights advocates argue that such tools are essentially a digital form of non-consensual pornography. In response, a few governments have started considering laws to criminalize the creation and distribution of AI-generated explicit content without the subject’s consent. However, legislation often lags far behind the pace of technology, leaving victims vulnerable and often without legal recourse.

Tech companies and app stores also play a role in either enabling or curbing the spread of undress AI removers. When these apps are allowed on mainstream platforms, they gain credibility and reach a wider audience, despite the harmful nature of their use cases. Some platforms have begun taking action by banning certain keywords or removing known violators, but enforcement remains inconsistent. AI developers must be held accountable not only for the algorithms they build but also for how those algorithms are distributed and used. Ethically responsible AI means implementing built-in safeguards to prevent misuse, including watermarking, detection tools, and opt-in-only systems for image manipulation. Unfortunately, in the current ecosystem, profit and virality often override ethics, especially when anonymity shields creators from backlash.

Another emerging concern is the deepfake crossover. Undress AI removers can be combined with deepfake face-swapping tools to create fully synthetic adult content that appears real, even though the person involved never took part in its creation. This adds a layer of deception and complexity that makes image manipulation harder to prove, especially for an average person without access to forensic tools. Cybersecurity professionals and online safety organizations are now pushing for better education and public discourse on these technologies. It is crucial to make the average internet user aware of how easily images can be altered and of the importance of reporting such violations when they are encountered online. Furthermore, detection tools and reverse image search engines must evolve to flag AI-generated content more reliably and to alert individuals if their likeness is being misused.

The psychological toll on victims of AI image manipulation is another dimension that deserves more focus. Victims may suffer from anxiety, depression, or post-traumatic stress, and many face difficulties seeking support because of the taboo and embarrassment surrounding the issue. It also affects trust in technology and digital spaces. If people start fearing that any image they share might be weaponized against them, it will stifle online expression and create a chilling effect on social media participation. This is especially harmful for young people who are still learning how to navigate their digital identities. Schools, parents, and educators need to be part of the conversation, equipping younger generations with digital literacy and an understanding of consent in online spaces.

From a legal standpoint, current laws in many countries are not equipped to handle this new form of digital harm. While some nations have enacted revenge porn legislation or laws against image-based abuse, few have specifically addressed AI-generated nudity. Legal experts argue that intent should not be the only factor in determining criminal liability; harm caused, even unintentionally, should carry consequences. Furthermore, there must be stronger collaboration between governments and tech companies to develop standardized practices for identifying, reporting, and removing AI-manipulated images. Without systemic action, individuals are left to fight an uphill battle with little protection or recourse, reinforcing cycles of exploitation and silence.

Despite the dark implications, there are also signs of hope. Researchers are developing AI-based detection tools that can identify manipulated images, flagging undress AI outputs with high accuracy. These tools are being integrated into social media moderation systems and browser plugins to help users identify suspicious content. Additionally, advocacy groups are lobbying for stricter international frameworks that define AI misuse and establish clearer user rights. Education is also on the rise, with influencers, journalists, and tech critics raising awareness and sparking important conversations online. Transparency from tech firms and open dialogue between developers and the public are critical steps toward building an internet that protects rather than exploits.

Looking forward, the key to countering the threat of undress AI removers lies in a united front: technologists, lawmakers, educators, and everyday users working together to set boundaries on what should and shouldn’t be possible with AI. There must be a cultural shift toward understanding that digital manipulation without consent is a serious offense, not a joke or prank. Normalizing respect for privacy in online environments is just as important as building better detection systems or writing new laws. As AI continues to evolve, society must ensure that its advancement serves human dignity and safety. Tools that can undress or violate a person’s image should never be celebrated as clever tech; they should be condemned as breaches of ethical and personal boundaries.

In conclusion, “undress AI remover” is not just a trendy keyword; it is a warning sign of how innovation can be misused when ethics are sidelined. These tools represent a dangerous intersection of AI power and human irresponsibility. As we stand on the brink of even more powerful image-generation technologies, it becomes critical to ask: just because we can do something, should we? The answer, when it comes to violating someone’s image or privacy, must be a resounding no.